What Is the Bernoulli Distribution?

Published by

sanya sanya

Probability distributions are essential mathematical tools used to model and understand random variables and their outcomes. One such distribution is the Bernoulli distribution, named after the Swiss mathematician Jacob Bernoulli. The Bernoulli distribution is a fundamental concept in probability theory and serves as the building block for more complex distributions. In this blog, we will explore the Bernoulli distribution, its characteristics, and its applications in various fields.

Definition of Bernoulli Distribution

The Bernoulli distribution is a discrete probability distribution that models a random variable with two possible outcomes, often referred to as "success" and "failure." It is commonly used to represent binary experiments or trials where the outcome can be classified as a success or a failure. The distribution is defined by a single parameter, p, which represents the probability of success.

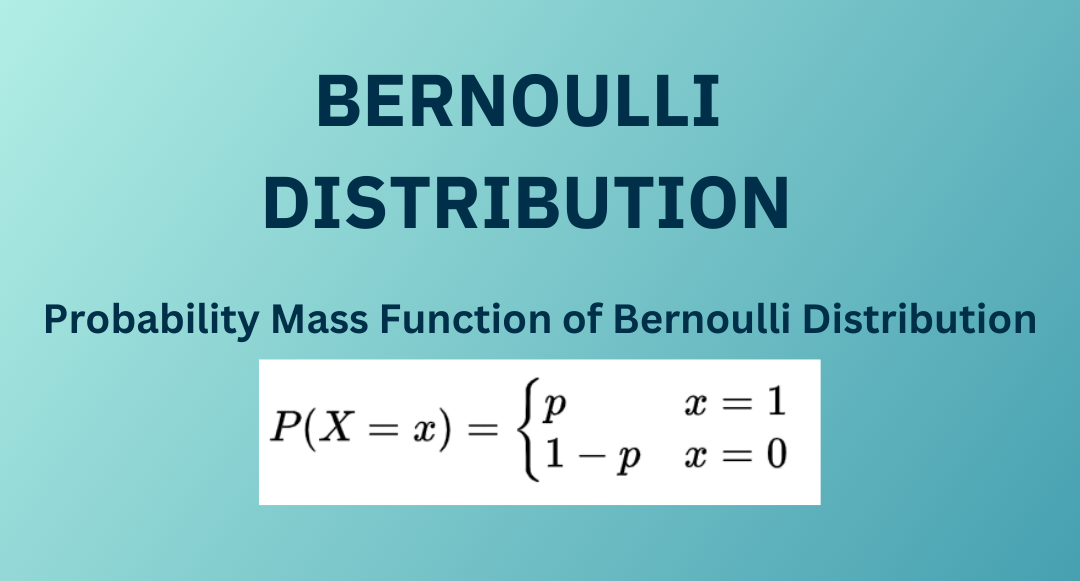

Let's denote the random variable as X, which follows a Bernoulli distribution. The probability mass function (PMF) of X can be defined as follows:

P(X = 1) = p, for a success

P(X = 0) = 1 - p, for a failure

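Here, P(X = 1) is the probability of a success and P(X = 0) is the probability of a failure. Because p is itself a probability, it must satisfy 0 ≤ p ≤ 1, and the two probabilities always sum to 1. Both cases can be written in one formula: P(X = x) = p^x * (1 - p)^(1 - x) for x in {0, 1}.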
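The PMF above translates almost directly into code. The following is a minimal sketch in plain Python; the function name `bernoulli_pmf` and the value p = 0.3 are illustrative choices, not part of the definition.

```python
def bernoulli_pmf(x: int, p: float) -> float:
    """Return P(X = x) for a Bernoulli variable with success probability p."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must lie in [0, 1]")
    if x == 1:
        return p          # success
    if x == 0:
        return 1.0 - p    # failure
    return 0.0            # any other value has probability zero


p = 0.3  # illustrative success probability (an assumption for this sketch)
print("P(X = 1) =", bernoulli_pmf(1, p))
print("P(X = 0) =", bernoulli_pmf(0, p))
```

Returning 0.0 for values other than 0 and 1 is one possible design choice; raising an error for such inputs would be equally reasonable.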

Expected Value and Variance:

The expected value, or mean, of a Bernoulli distribution is given by

E(X) = p,

which is simply the probability of success. The variance of the distribution is given by

Var(X) = p(1 - p)

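The expected value indicates the average outcome over many Bernoulli trials, while the variance measures the spread or dispersion of the distribution. The variance is largest at p = 0.5, where it equals 0.25, so the outcome is most unpredictable when success and failure are equally likely. It shrinks to 0 as p approaches 0 or 1, where the outcome becomes nearly certain.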
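To see where these formulas come from, the mean and variance can be computed directly from the two outcomes and their probabilities. This is a small sketch; the helper name `bernoulli_moments` and the grid of p values are assumptions made for illustration.

```python
def bernoulli_moments(p: float) -> tuple[float, float]:
    """Compute the mean and variance from the definition, using the two outcomes."""
    outcomes = {0: 1.0 - p, 1: p}  # value -> probability
    mean = sum(x * prob for x, prob in outcomes.items())
    variance = sum((x - mean) ** 2 * prob for x, prob in outcomes.items())
    return mean, variance


# Scan a coarse grid of p values to see how the variance behaves.
grid = [i / 10 for i in range(11)]
for p in grid:
    mean, variance = bernoulli_moments(p)
    print(f"p = {p:.1f}  mean = {mean:.2f}  variance = {variance:.2f}")

# The grid point with the largest variance.
p_max = max(grid, key=lambda p: bernoulli_moments(p)[1])
print("variance is largest at p =", p_max)
```

On this grid the largest variance occurs at p = 0.5, and the computed values agree with p and p(1 - p) up to floating-point rounding.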

Applications:

The Bernoulli distribution finds applications in various domains, including:

- Coin Flips: Modeling the outcome of a fair or biased coin flip, where heads can be considered a success and tails a failure.

- Binary Classification: In machine learning, the Bernoulli distribution can be used to model binary outcomes, such as predicting whether an email is spam or not, or classifying an image as containing a specific object or not.

- Risk Analysis: In finance and insurance, the Bernoulli distribution can be used to model binary events, such as the occurrence of a credit default or an insurance claim.

- Quality Control: Assessing the success or failure of a manufactured product based on specific criteria, such as whether it meets certain specifications or quality standards.

- Surveys and Polling: Analyzing responses to binary survey questions, such as yes/no or agree/disagree questions, to estimate the proportion of the population that falls into each category.

- Genetics: Modeling the inheritance of traits, where the outcomes can be classified as either exhibiting the trait or not.
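Several of these applications come down to the same task: observing many binary outcomes and estimating p from them. The sketch below simulates yes/no responses and estimates p as the sample proportion. The true value 0.3, the sample size, the seed, and the function names are all hypothetical choices for illustration, not data from any real survey.

```python
import random


def simulate_bernoulli(p: float, n: int, seed: int = 0) -> list[int]:
    """Draw n independent Bernoulli(p) outcomes (1 = success, 0 = failure)."""
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    return [1 if rng.random() < p else 0 for _ in range(n)]


def estimate_p(samples: list[int]) -> float:
    """Estimate the success probability as the fraction of successes."""
    return sum(samples) / len(samples)


true_p = 0.3  # hypothetical "true" proportion, assumed for this sketch
samples = simulate_bernoulli(true_p, n=10_000)
p_hat = estimate_p(samples)
print(f"estimated p = {p_hat:.3f} (simulated with p = {true_p})")
```

With more samples the estimate tends to settle closer to the value used in the simulation, which is why larger surveys give tighter estimates.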

Understanding Through Example

Let's consider a simple example of tossing a fair coin. The outcome of this experiment can be classified as a success (heads) or a failure (tails). We can model this scenario using a Bernoulli distribution.

In this case, the parameter p, which represents the probability of success (getting heads), is 0.5 since we assume the coin is fair.

Now, let's calculate the probabilities for a few scenarios:

- What is the probability of getting heads (success) in a single coin toss?

  P(X = 1) = p = 0.5

  The probability of success (getting heads) in a single toss is 0.5.

- What is the probability of getting tails (failure) in a single coin toss?

  P(X = 0) = 1 - p = 1 - 0.5 = 0.5

  The probability of failure (getting tails) in a single toss is also 0.5.

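- If we toss the coin three times, what is the probability of getting exactly two heads?

  A single Bernoulli variable only takes the values 0 and 1, so this question is about the number of heads across several independent Bernoulli trials, which follows a binomial distribution. Writing Y for the number of heads in n tosses, P(Y = k) = C(n, k) * p^k * (1 - p)^(n - k), where C(n, k) counts the ways to place k heads among n tosses.

  P(Y = 2) = C(3, 2) * (0.5)^2 * (1 - 0.5)^(3 - 2) = 3 * 0.25 * 0.5 = 0.375

  The probability of getting exactly two heads (successes) in three tosses is 0.375.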

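- If we toss the coin four times, what is the probability of getting at least three heads?

  Let Y be the number of heads in the four tosses. Each probability includes the binomial coefficient C(4, k), which counts the ways to place k heads among 4 tosses.

  P(Y = 3) = C(4, 3) * (0.5)^3 * (1 - 0.5)^(4 - 3) = 4 * 0.125 * 0.5 = 0.25

  P(Y = 4) = C(4, 4) * (0.5)^4 * (1 - 0.5)^(4 - 4) = 1 * 0.0625 * 1 = 0.0625

  P(at least 3 heads) = P(Y = 3) + P(Y = 4) = 0.25 + 0.0625 = 0.3125

  The probability of getting at least three heads (successes) in four tosses is 0.3125.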

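The single-toss calculations use the Bernoulli distribution's probability mass function (PMF) directly, where p is the probability of success and (1 - p) is the probability of failure. The multi-toss calculations count successes across n independent Bernoulli trials, so they use the binomial PMF, which multiplies p^k * (1 - p)^(n - k) by the number of arrangements C(n, k). With n = 1 the binomial PMF reduces to the Bernoulli PMF.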
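The coin-toss questions can be checked numerically. For more than one toss, the number of heads follows a binomial distribution, so the sketch below uses the binomial PMF with the coefficient `math.comb(n, k)`. The function name `binomial_pmf` is an illustrative choice.

```python
from math import comb


def binomial_pmf(k: int, n: int, p: float) -> float:
    """P(exactly k successes in n independent Bernoulli(p) trials)."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


p = 0.5  # fair coin

# One toss: the binomial PMF with n = 1 is the Bernoulli PMF.
heads_in_one = binomial_pmf(1, 1, p)                       # 0.5
tails_in_one = binomial_pmf(0, 1, p)                       # 0.5

# Exactly two heads in three tosses.
two_of_three = binomial_pmf(2, 3, p)                       # 0.375

# At least three heads in four tosses: add the cases k = 3 and k = 4.
at_least_three_of_four = sum(binomial_pmf(k, 4, p) for k in (3, 4))  # 0.3125

print(heads_in_one, tails_in_one, two_of_three, at_least_three_of_four)
```

A quick sanity check on any such calculation is that the probabilities over all k from 0 to n sum to 1.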

This example illustrates how the Bernoulli distribution can be used to model simple binary experiments, such as coin flips, and calculate the probabilities associated with different outcomes.

The Bernoulli distribution is a simple yet powerful tool for modeling binary outcomes in various fields. It allows us to understand the probability of success or failure in a single trial and provides insights into the expected value and variance. By utilizing the Bernoulli distribution, we can analyze and make predictions about real-world phenomena involving binary events. Its applications extend to diverse areas such as statistics, machine learning, finance, and genetics. Understanding the Bernoulli distribution lays a foundation for exploring more complex probability distributions and statistical models.
